Level Design with Intelligent User Interface in Unity

June 5th 2019

Promo Video with Instructions:

Aim of the Project

This project aims to provide new tools for level design that bring the designer's vision to life by letting them design from the player's perspective. The tools are meant to be intuitive and to enable the creative process rather than obstruct it, as Unity's complex UI can. More complex commands are also implemented to make the tools more efficient and to make experimenting during design easier.

This project introduces a new multimodal approach to level design in Unity, based on speech commands and hand gestures. Speech commands to create and edit objects can be given in natural language, and objects or locations can be selected by pointing with a finger. A gamepad can be used to navigate the camera and to select and move objects.

How to use it

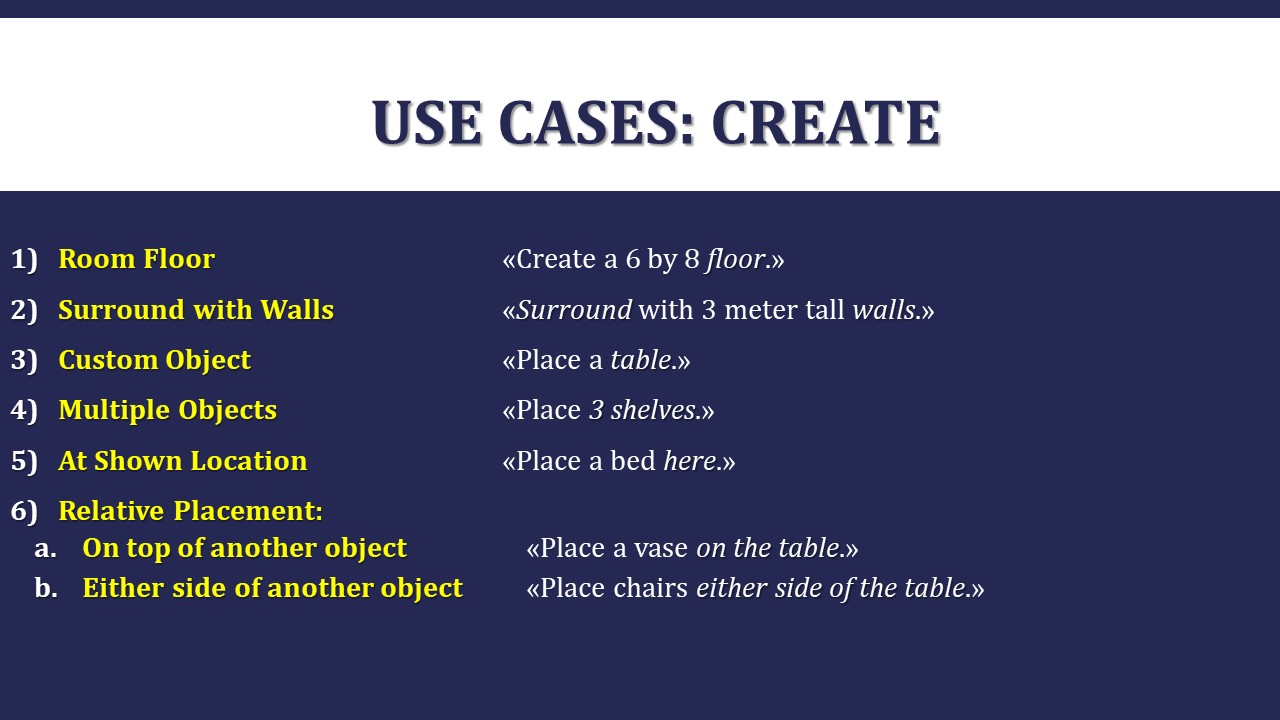

Voice commands have several uses, from creating objects to editing their properties. Objects can be created in the default location, relative to other objects, or where the user points with the index finger.

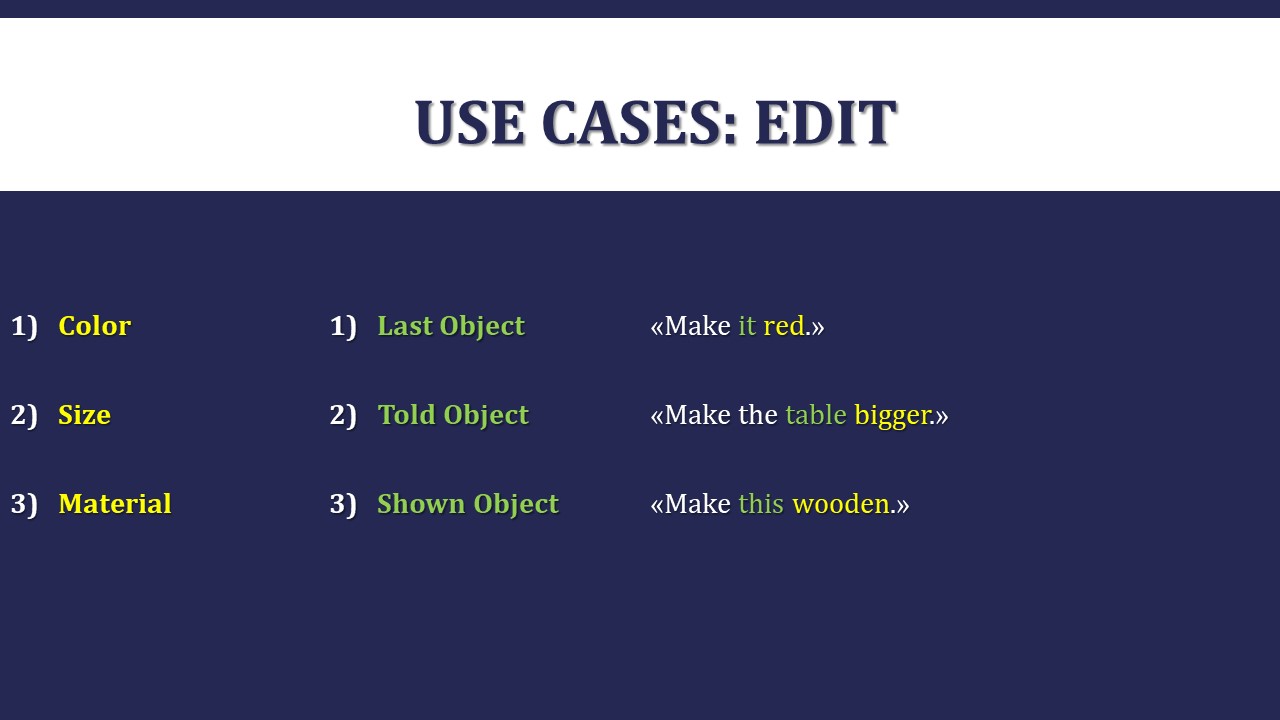

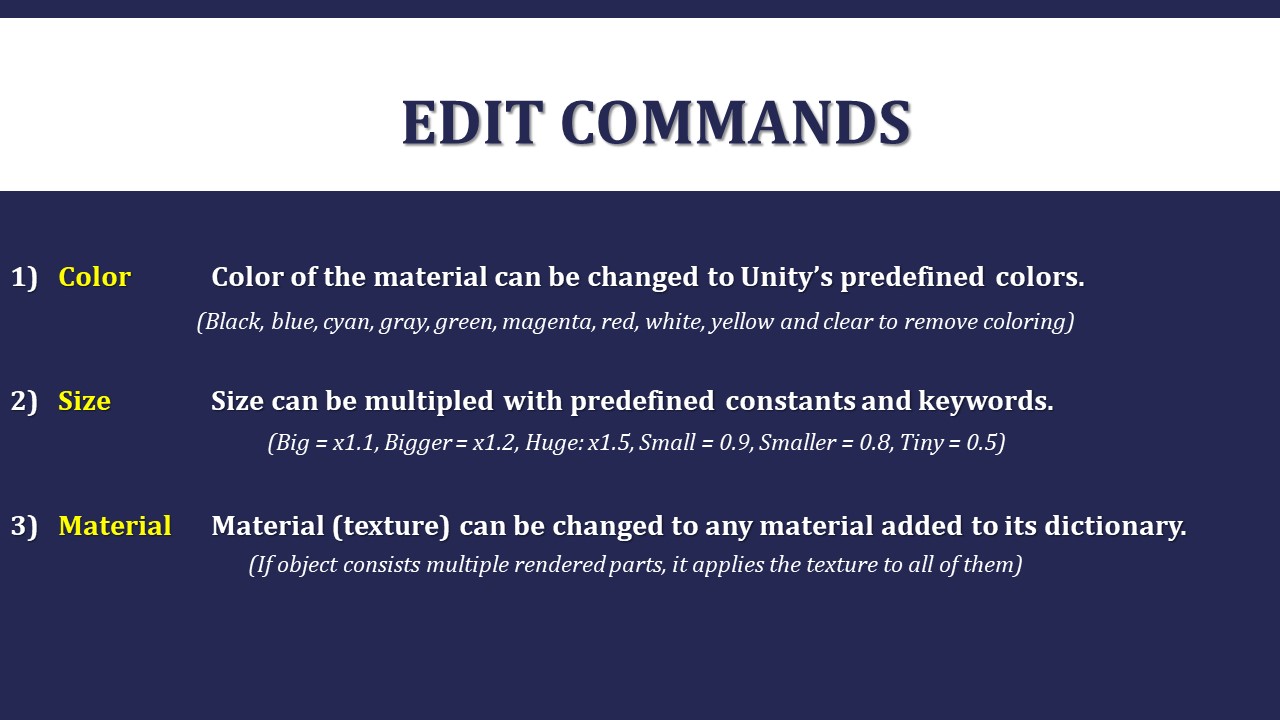

Users can select an object with the gamepad, by naming it verbally (if several matching objects are on the screen, the one closest to the middle is selected), or by pointing at it. They can then edit its attributes, such as its material, size, or color.
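
The verbal selection rule described above (pick the matching object closest to the middle of the screen) can be sketched as follows. This is a minimal, engine-agnostic illustration in Python, not the project's actual code: the `ScreenObject` type, the `pick_closest_to_center` function, and the use of normalized viewport coordinates (0 to 1 on each axis, with the center at 0.5, 0.5) are all assumptions made for the example.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Optional


@dataclass
class ScreenObject:
    """Hypothetical stand-in for a scene object projected onto the screen."""
    name: str
    x: float  # assumed normalized viewport coordinate, 0 = left, 1 = right
    y: float  # assumed normalized viewport coordinate, 0 = bottom, 1 = top


def pick_closest_to_center(objects: List[ScreenObject], name: str) -> Optional[ScreenObject]:
    """Return the visible object called `name` that is nearest the screen center."""
    candidates = [
        o for o in objects
        if o.name == name and 0.0 <= o.x <= 1.0 and 0.0 <= o.y <= 1.0
    ]
    if not candidates:
        return None  # nothing with that name is currently on screen
    return min(candidates, key=lambda o: hypot(o.x - 0.5, o.y - 0.5))


scene = [
    ScreenObject("chair", 0.10, 0.10),
    ScreenObject("chair", 0.55, 0.50),
    ScreenObject("table", 0.50, 0.50),
    ScreenObject("chair", 1.40, 0.50),  # off screen, ignored
]
selected = pick_closest_to_center(scene, "chair")
```

In an engine such as Unity, the coordinates would typically come from projecting each object's world position through the active camera; that step is left out here.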

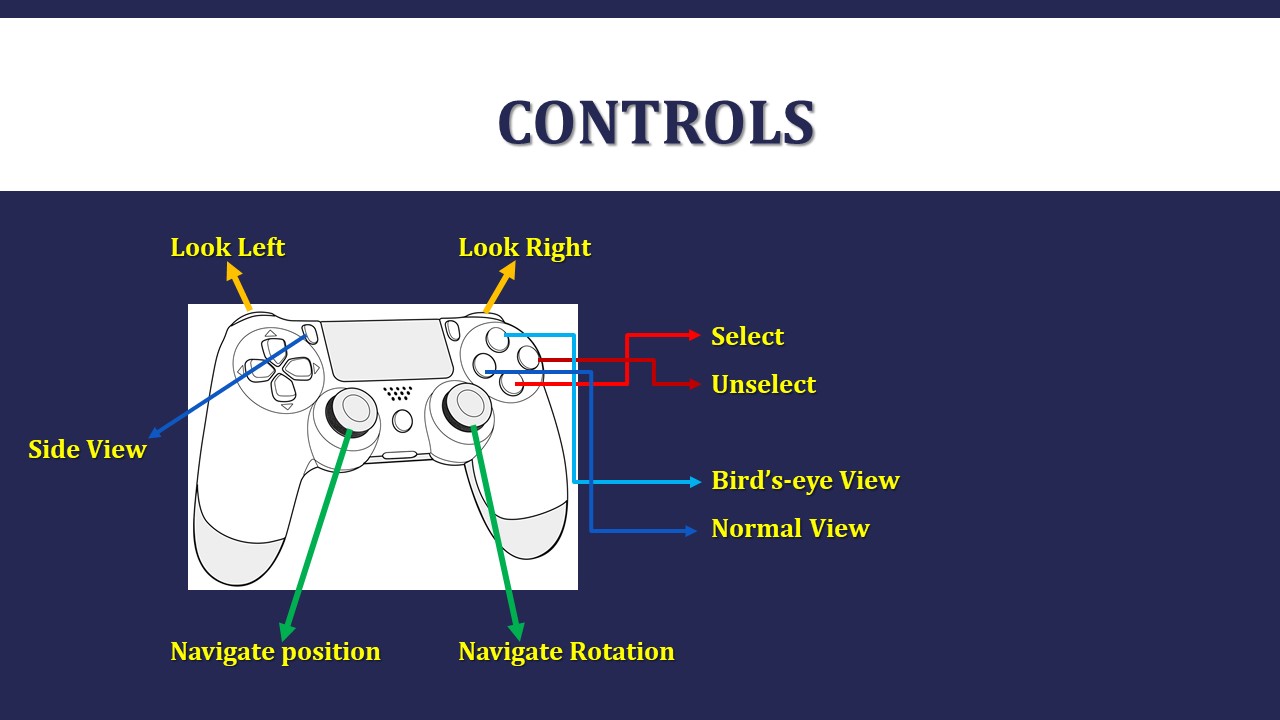

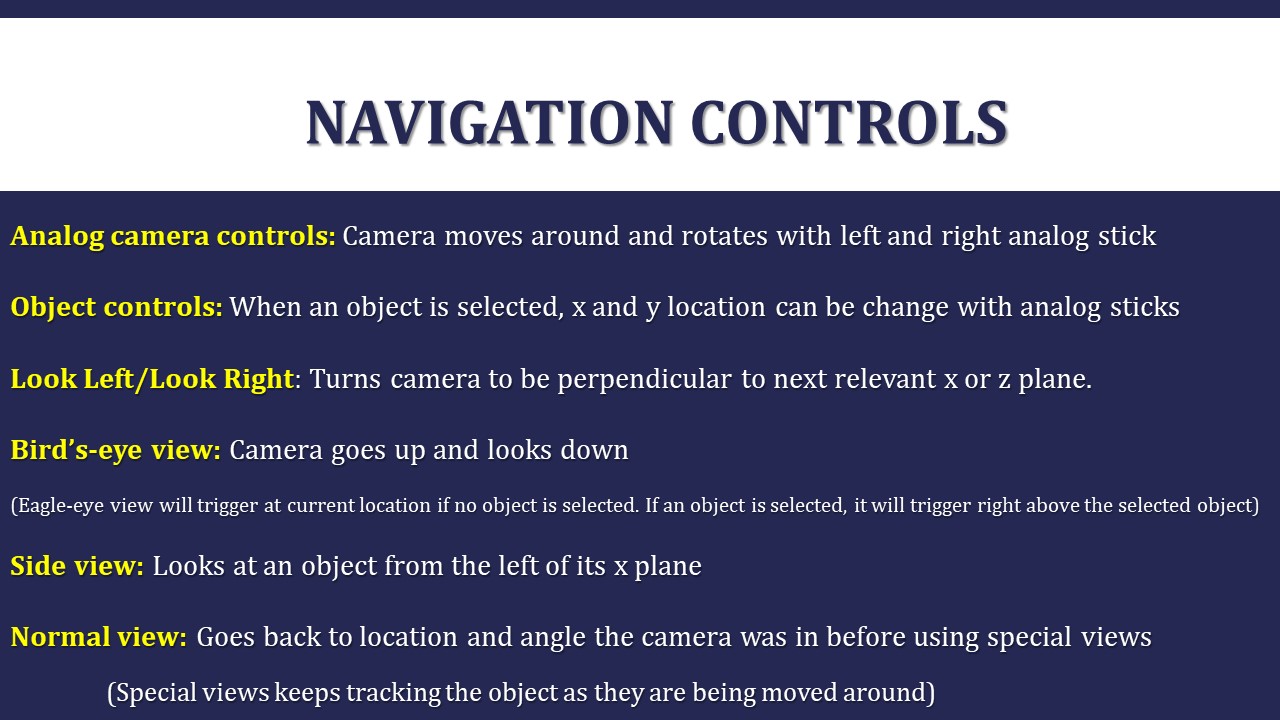

For navigating around the level and controlling the camera, a gamepad is used, which keeps the design process in the player's point of view. The perspective can also be changed to a bird's-eye view or a side view for precise placement.

Technical Background

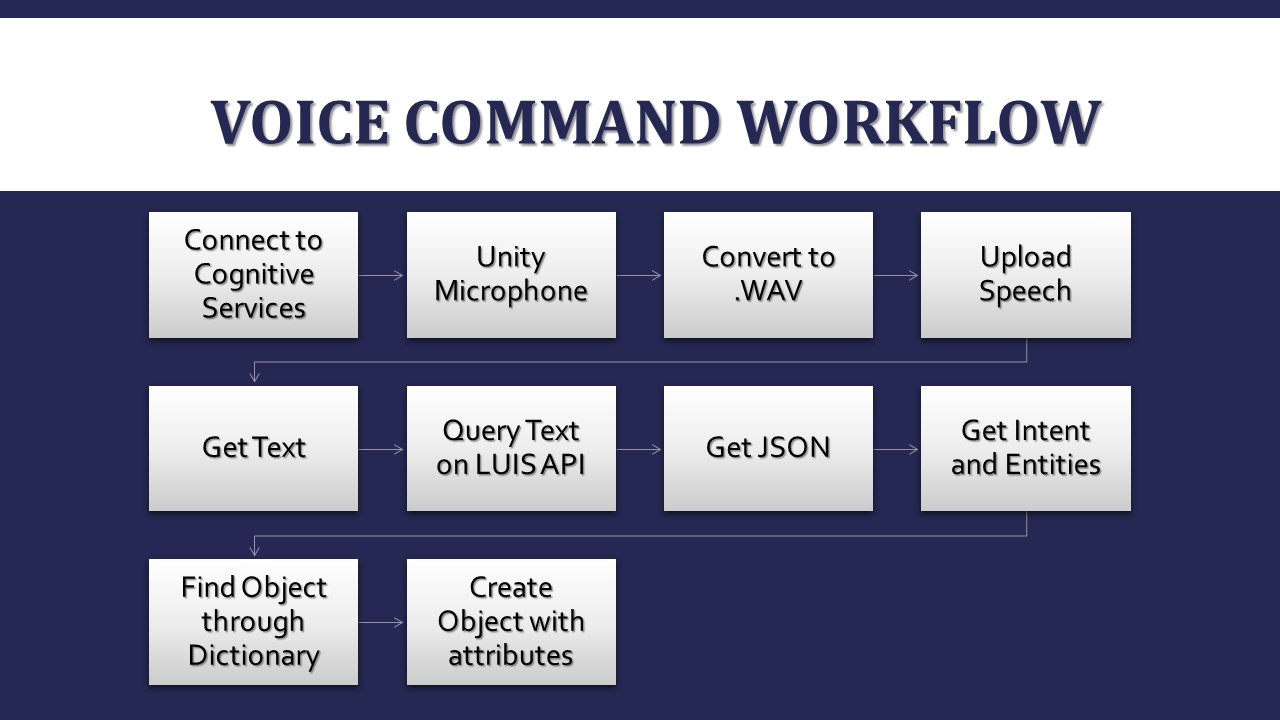

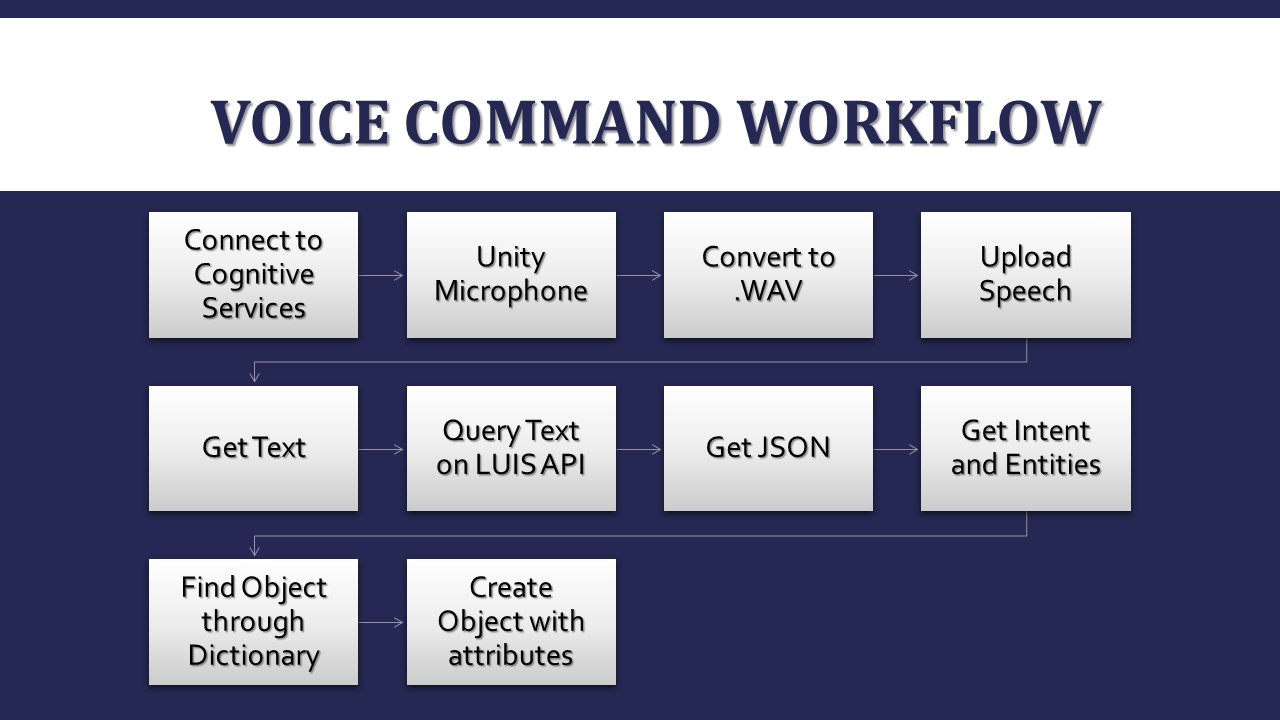

For voice commands, Microsoft's Speech Services are used. Audio is split into small WAV files and uploaded to Speech Services, which returns the recognized sentence as a string with a certain accuracy. That string is then sent to Microsoft's LUIS for natural language processing.

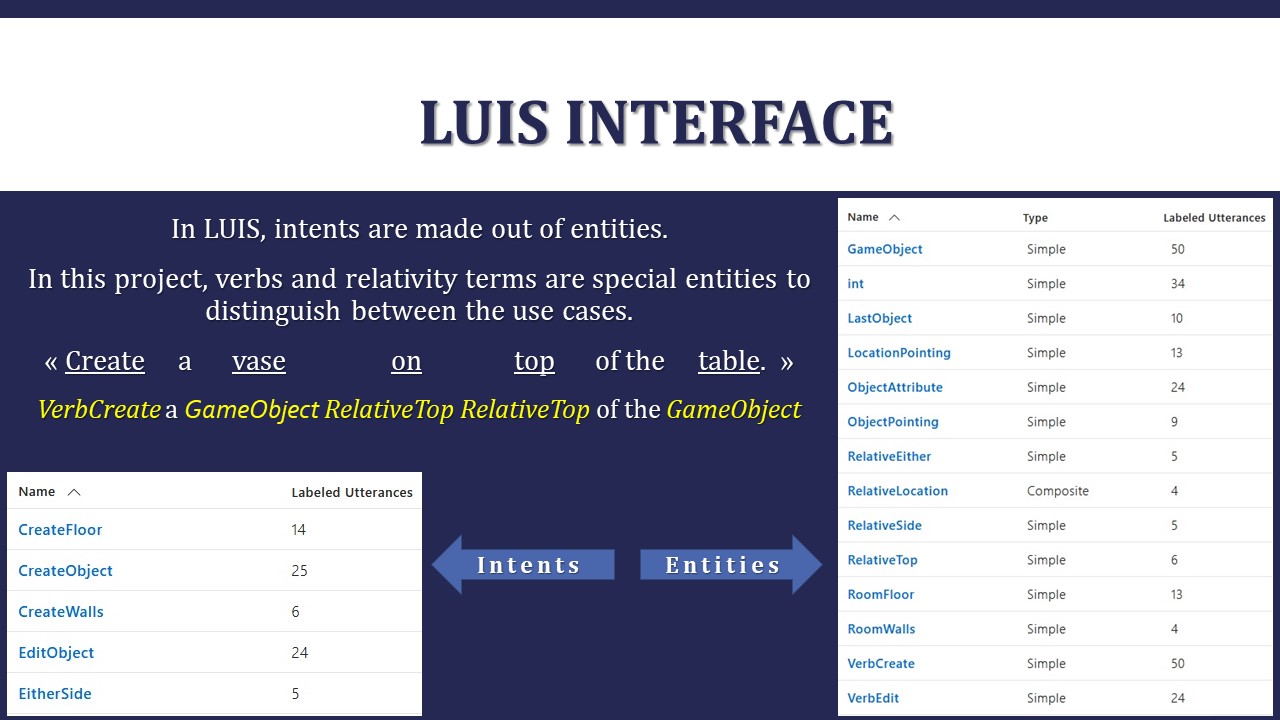

The trained LUIS model finds the intent and determines the entities involved. The service returns a JSON file with intents and entities, which determine the use case and the target objects.
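
To make the intent and entity step concrete, here is a small Python sketch of reading such a JSON response. The field names (`topScoringIntent`, `entities`, `entity`, `type`, `score`) are assumed to resemble the LUIS v2 prediction response; the intent name `CreateObject`, the entity types `Object` and `Color`, the sample payload, and the `min_score` threshold are hypothetical and not taken from the project.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical response for the spoken command "create a red chair".
SAMPLE_RESPONSE = """
{
  "query": "create a red chair",
  "topScoringIntent": {"intent": "CreateObject", "score": 0.97},
  "entities": [
    {"entity": "red", "type": "Color", "startIndex": 9, "endIndex": 11},
    {"entity": "chair", "type": "Object", "startIndex": 13, "endIndex": 17}
  ]
}
"""


def parse_command(raw: str, min_score: float = 0.5) -> Tuple[str, Dict[str, List[str]]]:
    """Extract the top intent and group entity strings by entity type."""
    data = json.loads(raw)
    top = data.get("topScoringIntent", {})
    intent = top.get("intent", "None")
    if top.get("score", 0.0) < min_score:
        intent = "None"  # treat low-confidence predictions as "no command"
    entities: Dict[str, List[str]] = {}
    for item in data.get("entities", []):
        entities.setdefault(item["type"], []).append(item["entity"])
    return intent, entities


intent, entities = parse_command(SAMPLE_RESPONSE)
```

The intent would then select the use case (create, edit, select, and so on), while the grouped entity strings name the objects and attributes the command applies to.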

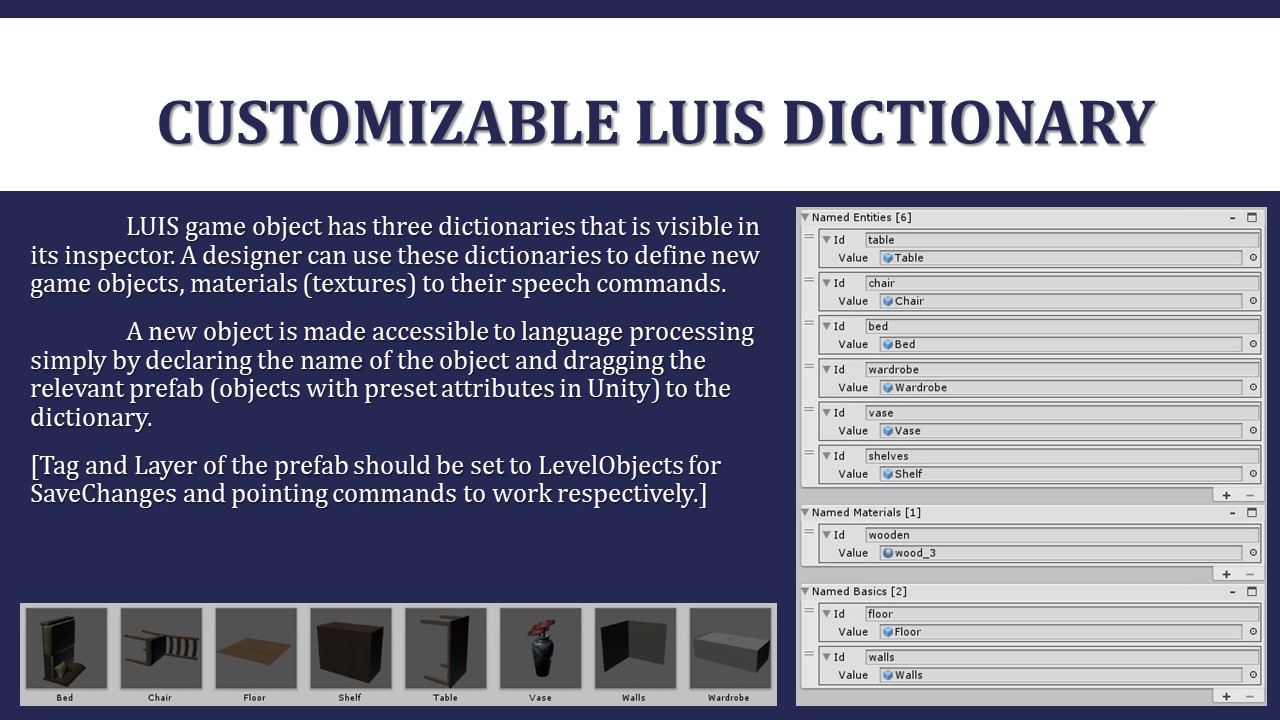

Object entities are returned as strings, so their corresponding prefabs (Unity's game objects) are found by looking them up in a string-to-prefab dictionary.

This dictionary is exposed in Unity's native UI and can easily be expanded or customized by the designer. A prefab is added to the speech commands by entering the name of the object and then picking the relevant prefab. If the name is an English word, Cognitive Services should recognize it correctly, and because LUIS is trained to work with most objects and furniture, the new command may work even without retraining the model.
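
The string-to-prefab lookup can be illustrated with a short Python sketch. It is only an assumption of how such a mapping might behave: the `PrefabRegistry` class, the case and whitespace normalization, and the asset paths used as stand-ins for prefabs are all hypothetical, and in the project the entries are edited through Unity's UI rather than in code.

```python
from typing import Dict, Optional


class PrefabRegistry:
    """Hypothetical name-to-prefab mapping; prefabs are represented by path strings."""

    def __init__(self) -> None:
        self._prefabs: Dict[str, str] = {}

    @staticmethod
    def _normalize(name: str) -> str:
        # Lowercase and collapse whitespace so "  Coffee  Table" matches "coffee table".
        return " ".join(name.lower().split())

    def register(self, name: str, prefab: str) -> None:
        """Add or replace the prefab used for a spoken object name."""
        self._prefabs[self._normalize(name)] = prefab

    def lookup(self, entity: str) -> Optional[str]:
        """Return the prefab for an entity string, or None if it is unknown."""
        return self._prefabs.get(self._normalize(entity))


registry = PrefabRegistry()
registry.register("Chair", "Prefabs/Chair")                # hypothetical asset paths
registry.register("coffee table", "Prefabs/CoffeeTable")
prefab = registry.lookup("  Coffee  Table ")
```

Returning `None` for unknown names lets the caller decide how to react, for example by ignoring the command or asking the user to repeat it.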

Materials use a similar dictionary, so they are extensible and customizable in the same way.

Conclusion

This intelligent user interface proved promising: it provided most of the tools needed for level design with similar effectiveness, and it was intuitive and fun to use. It still needs some more functionality to be feature-complete. Compared to the classic method, it took less time and was slightly less precise. Details are in the report file.